NEWS

TECH BLOG / May 31, 2023

Perceptually Optimized Encoding: Benefits, Possibilities, and Difficulties of Assessment with Current Quality Metrics

By Sergio Sanz-Rodríguez, PhD and Mauricio Alvarez-Mesa, PhD – Most modern optimized video encoders incorporate Perceptually Optimized Encoding (POE) modes which make encoding decisions that take into account some properties of the Human Visual System (HVS) in order to improve compression efficiency and perceived quality.

Traditional encoders without perceptual optimizations have been extensively studied in Rate-Distortion Optimization (RDO) theory and their compression efficiency has been assessed using the popular Peak Signal-to-Noise Ratio (PSNR).

It is well known that the PSNR metric does not correlate well with the quality as perceived by human viewers and, therefore, the distortion introduced by encoders is not properly quantified by this metric.

In recent years, several Image or Video Quality Assessment (IQA or VQA) metrics have been proposed with the stated objective of providing a better correlation between human perception and objective quality assessments when taking into account the distortion introduced by video encoding. Some of these metrics include: Structural SIMilarity Index (SSIM), Video Multi-method Assessment Function (VMAF), or Perceptually Weighted PSNR (XPSNR).

These metrics are very popular for evaluating traditional encoders without perceptual optimizations, but it is still unclear whether these metrics can be suitable to evaluate the quality yielded by the video encoder with POE enabled.

The goal of this technical blog is to present the main ideas and concepts behind perceptually optimized encoding, its benefits and possibilities, while at the same time highlight the difficulties of evaluating POE modes using currently available objective quality metrics.

1. Perceptually Optimized Encoding (POE)

In general, the objective of a video encoder is to comply with a certain bandwidth restriction at the best possible quality or to provide a certain video quality at the lowest bitrate possible. The encoder achieves these objectives by exploiting spatial, temporal, and statistical redundancies in the video data and by optimizing for a certain quality criteria. Regular, i.e. non-perceptually optimized encoders, commonly use optimization techniques that minimize errors (e.g. distortion) based on the Mean Squared Error (MSE) measure or its logarithmic form, the PSNR. RDO theory and encoder optimization techniques based on MSE are well understood and deployed.

However, it has been shown that the MSE and PSNR do not correlate well with the perception of quality by human viewers [1]. The consequences of optimizing encoders for the MSE are negative: higher bitrates than necessary and therefore higher system costs, or lower quality and therefore a negative experience for the end user [2].

The objective of a perceptually optimized encoder is to take into account the properties of the Human Visual System (HVS) in order to reduce the bitrate at equal perceived quality, or increase the perceived quality under a bitrate constraint (see Figure 1). For this purpose, the perceptually optimized encoder exploits the so-called psychovisual redundancies, as the HVS perceives pixel errors differently depending on many factors such as motion, brightness, spatial patterns, and viewing distance.

For simplicity, in this blog we will refer to the perceptual modes of video encoders as POE.

1.1. POE Techniques

Based on the framework proposed by Zhang et al. [3], a perceptually optimized encoder is composed of the following components:

- Visual Perception Model

- Quality Assessment Model

- Video Encoder Optimization

Visual Perception Model

In order to exploit psychovisual redundancies, the encoder requires a model of the HVS. The visual perception model is based on properties of the HVS such as acuity, luminance perception, color appearance, contrast sensitivity, temporal sensitivity, texture masking, motion aliasing, etc. This model can be derived mathematically from perceptual studies or be derived from data.

Visual Quality Assessment (VQA) Model

A metric or model that correlates well with human perception is needed in order to evaluate the impact of perceptual coding, or to optimize the perceptual coding process.

Video Encoder Optimization

By using the visual perception model, the encoder can be optimized to improve the quality perceived by human observers. Some common optimization techniques include:

- Perceptually optimized bit allocation and rate control: with the objective of allocating more bits to quality sensitive regions.

- Perceptual RDO: in order to guide the encoding optimization process, for example block partitioning or mode selection, based on perceptual criteria.

- Perceptually optimized transform and quantization: which use non-uniform quantization and custom quantization matrices to increase compression while maintaining the same visual quality.

- Perceptually optimized (preprocessing) filtering: which applies certain image or video filters prior to compression with the objective of improving perceived quality.

There are two main ways of using perceptual models for improving video coding:

- To guide the perceptual coding, such as bit allocation, mode decision, quantization, and filtering; and separately to evaluate their impact using a VQA metric.

- To use a perceptual VQA metric directly as a measure of distortion as part of the encoder RDO process.

1.2. POE Implementations

The majority of industrial encoders include HVS-based POE modes to minimize the perceptibility of coding errors. In this way, those regions of the picture exhibiting high sensitivity to perceived distortions will be encoded with higher quality (more bits). The redistribution of bits in the image is carried out using the Quantization Parameter Adaptation (QPA) method, also called adaptive quantization in the literature, in which the QP is regulated on a block-by-block basis. Many open-source encoders rely on QPA for perceptual bit allocation: x264 [4], x265 [5], SVT-AV1 [6], and VVenC [7]. The SVT-HEVC encoder seems to utilize, according to the documentation, adaptive quantization differently to improve the sharpness of the background [8].

The other common POE approach consists of modifying the RDO process to rely on a VQA metric or model that is better correlated with human perception than MSE. The well-known x264 and x265 encoders include SSIM-based and psychovisual tuning modes as well as others tailored to better encode different types of content (animation, film grain). The rav1e [9] and SVT-AV1 encoders also include psychovisual tuning modes; the latter still utilizes the MSE distortion metric for RDO but performs other optimizations for better preservation of details [10]. The libaom AV1 encoder features a VMAF-based tuning mode, as well as those for PSNR and SSIM maximization [11].

In the case of VVenC, the encoder uses a perceptual VQA metric, in this case the Perceptually Weighted PSNR (XPSNR) that we will describe later, as a measure of distortion for the encoder RDO, and for guiding the bit allocation process using QPA [12].

A third approach uses custom frequency-dependent quantization matrices based on the contrast sensitivity properties of the HVS for bitrate reduction without affecting visual quality. More information about this method is found in [13].

The same goal is also pursued by a fourth approach based on perceptually optimized filtering. In this POE method, the transform coefficients are low-pass filtered before quantization based on the HVS properties. Some encoders that use this strategy are the following: SVT-HEVC [14], and AWS Elemental [15]. The latter also uses a temporal domain filter which is combined with the transform domain filter.

The result of these methods is an overall improved visual quality compared to the non-POE version, especially at low bandwidth where the bit budget per frame is quite limited.

1.3. POE in Spin Digital’s Live Encoders

Spin Digital has implemented a POE mode for its HEVC and VVC encoders (Spin Enc Live) [16]. The encoder is based on a mathematical model of visual perception that guides the bit allocation, transformation, and quantization processes.

As the encoders are designed for Ultra High Definition (4K and 8K) live applications, the HVS model has been extensively optimized for real-time operation at large resolutions and frame rates.

The POE model has been validated for real-time operation up to 8K (7680×4320 pixels), 60 fps, 10-bit video, when running, together with the encoder, on a dual socket server with 2x 38 CPU cores [17].

1.4. Summary of POE Modes in Open-source and Industrial Encoders

The table below shows the command-line options to be used to enable or disable the POE modes of some of the most popular open-source encoders and the Spin Digital HEVC and VVC encoders.

| Encoder | Disabling POE | POE options | Comments on POE |

|---|---|---|---|

| x264 | --tune psnr/ssim | --aq-mode 3 --psy-rd rdo:trellis | Adaptive quantization Psychovisually optimized RDO |

| x265 | --tune psnr/ssim | --aq-mode 1 --psy-rd 2.0 --psy-rdoq 1.0 | Adaptive quantization Psychovisually optimized RDO |

| SVT-HEVC | -brr 0 -sharp 0 | -brr 1 -sharp 1 | Bitrate reduction by low-pass filtering of the transform coefficients Improved background sharpness using adaptive quantization |

| SVT-AV1 | -aq-mode 0 -tune 1 (PSNR) | -aq-mode 2 -tune 0 (visual quality) | Adaptive quantization: always turned on when rate control is enabled MSE-based RDO with optimizations for better detail preservation |

| VVenC | --PerceptQPA=0 | --PerceptQPA=1 | Perceptual QP adaptation and XPSNR-based RDO |

| Spin Digital HEVC/VVC | --percept 0 | --percept 1,2 | Perceptual QP adaptation and custom quantization |

2. Evaluating Encoders: Video Quality Assessment Metrics

The distortion introduced by a video encoder can be measured using either subjective quality metrics or objective quality metrics.

2.1. Subjective Quality Assessment Metrics

Subjective quality metrics are derived from human judgments, who are asked to assess the perceived quality of an impaired video compared to its reference (see Figure 2). The most commonly used measurement is the so-called Differential Mean Opinion Score (DMOS). Official subjective tests following standardized recommendations, such as ITU-R BT.500 [18], require a lot of logistic effort, time and resources for collecting a representative number of test subjects and preparing test sessions.

2.2. Objective Quality Assessment Metrics

In contrast, objective quality metrics aim to quantify the distortion between the original video and compressed video based on a mathematical model (see Figure 3). The most popular methods to compute the distortion introduced by a video encoder are: PSNR, (MS)-SSIM, and VMAF.

Peak Signal-to-Noise Ratio (PSNR)

It is computed as the ratio between the maximum possible signal value and the energy or the error measured as MSE. This ratio is then expressed in logarithmic form (dB).

Multiscale (MS)-Structural SIMilarity (SSIM)

The SSIM index relies on the fact that the Human Visual System (HVS) is able to extract from a picture the structural information of its object. SSIM focuses on measuring the distortion that a system generates on the structural information of the objects. MS-SSIM [19] is an extension of the original metric that provides more flexibility by taking into account the variations of viewing conditions.

Video Multi-method Assessment Function (VMAF)

The aim of the VMAF metric [20] is to predict the perceived quality of compressed videos with a Support Vector Machine (SVM) regressor that combines several “elementary” quality metrics into a single score. Similarly to MOS, the VMAF score goes from 0 (poorest quality) to 100 (highest quality). VMAF is considered the most popular category of objective metrics that claim to be correlated with human perception. However, it presents some drawbacks that are listed next:

- It was originally trained with Full HD videos and later extended to 4K; in principle, it cannot be used to evaluate videos with a resolution higher than 4K (e.g. 8K)

- It was not trained with HDR and Wide Color Gamut videos, only SDR BT.709

- It was trained with videos encoded in H.264 only [21]

- It does not take into account the chroma information of the video, only luma

Despite these limitations, VMAF is still being used to evaluate codecs based on HEVC, AV1, and VVC, with video sequences beyond 4K or in HDR.

Other interesting objective quality metrics are described below:

Perceptually Weighted PSNR (XPSNR)

XPSNR [22] is an extension of the traditional PSNR metric that aims to calculate quality scores that are more correlated to human perception. XPSNR relies on a model of the HVS that analyzes the video spatially and temporally to detect areas in the picture that can be more sensitive to visible distortions.

Recommendation ITU-T P.1204.3

P.1204.3 [23] describes a bitstream-based video quality model for monitoring the perceived quality of bitstreams. The model belongs to the no-reference category, which extracts features directly from the bitstream and generates a predicted MOS score ranging from “0” (poor) to “5” (excellent). The metric has been designed to support H.264, HEVC, and VP9, and videos of up to 4K resolution. It is also claimed to outperform well-known full-reference metrics such as PSNR, SSIM, and VMAF in terms of correlation with MOS [24]. The open-source reference implementation of P.1204.3 is available online [25].

3. Quality Assessment of Video Encoders with POE Modes

Traditionally, the assessment of the compression efficiency of video encoders is performed by configuring them assuming a specific use case (e.g., broadcasting, VoD) and tuning them for PSNR maximization. This method has been used in the major recent works on codec comparison: [17], [26], [27], [28], [29], [30].

However, there is also growing interest in the media industry in evaluating video encoders with their POE modes activated. To perform such an analysis, it is crucial to first find out whether the above mentioned objective quality metrics are really effective for this task.

3.1. Experimental setup

At Spin Digital we evaluated the compression efficiency of the POE modes of our two live software encoders, Spin Digital HEVC v2.0 and Spin Digital VVC v2.0, and the following four open-source software encoders: x265 v3.5, SVT-HEVC v1.5.1, SVT-AV1 v1.5.0, and VVenC v1.8.0. The video encoders were configured as follows: open Group of Pictures (GOP), random-access mode (long GOP), 1-second intra period, and Constant Bitrate (CBR) control with a 1-second buffer, except for SVT-AV1 and VVenC which only supports unconstrained Variable Bitrate (VBR) control when using random-access mode. Other encoding parameters, such as GOP size, GOP structure, and lookahead window, were kept at their default values.

In the experiments, a total of 12 4K 10-bit video clips of 1 minute long each were encoded at different bitrates ranging from 6 Mbps to 25 Mbps.

The Bjøntegaard Delta (BD)-rate method [31], [32] was used to compute the compression efficiency of the encoders. This method computes the average bitrate increase produced by a test encoder referred to a baseline encoder at the same quality.

3.2. Experimental Results

Table 2 shows the BD-rate results based on different objective quality metrics (PSNR, XPSNR, MS-SSIM, VMAF, and P.1204.3) produced by the six encoders with their POE modes enabled relative to the non-POE modes (PSNR tuned).

| Encoder - preset | PSNR BD-rate [%] | XPSNR BD-rate [%] | MS-SSIM BD-rate [%] | VMAF BD-rate [%] | P.1204.3 BD-rate [%] |

|---|---|---|---|---|---|

| x265 - medium | 15.51 | 10.42 | 10.18 | 7.40 | 9.46 |

| SVT-HEVC - 7 | 25.08 | 19.87 | 13.44 | 26.35 | -2.09 |

| SVT-AV1 - 8 | 15.79 | 14.72 | 11.73 | 5.23 | - |

| VVenC - faster | 11.59 | -5.22 | -1.03 | 17.12 | - |

| Spin Digital HEVC - balanced | 16.13 | 8.77 | 10.61 | 18.37 | -4.79 |

| Spin Digital VVC - balanced | 6.69 | 13.06 | 5.79 | 9.52 | - |

As can be seen in the table, the POE modes cause BD-rate losses for all the evaluated metrics, with the following exceptions that are analyzed next:

- The POE mode of VVenC produces an XPSNR BD-rate savings of 5.22%, which can be expected since this encoder relies on the XPSNR visual model to perform QPA. MS-SSIM also detects a (slight) gain, but VMAF a 17.12% loss.

- The POE modes of Spin Digital HEVC and SVT-HEVC achieve BD-rate gains according to the P.1204.3 metric. Nevertheless, these results might be inconclusive, since the P.1204.3 model was not designed to evaluate encoders with POE enabled, especially when using QPA. In this model, frame-level features are extracted from the bitstream, but bit and distortion distributions within the image are not taken into account to make MOS predictions.

3.3. Subjective Results

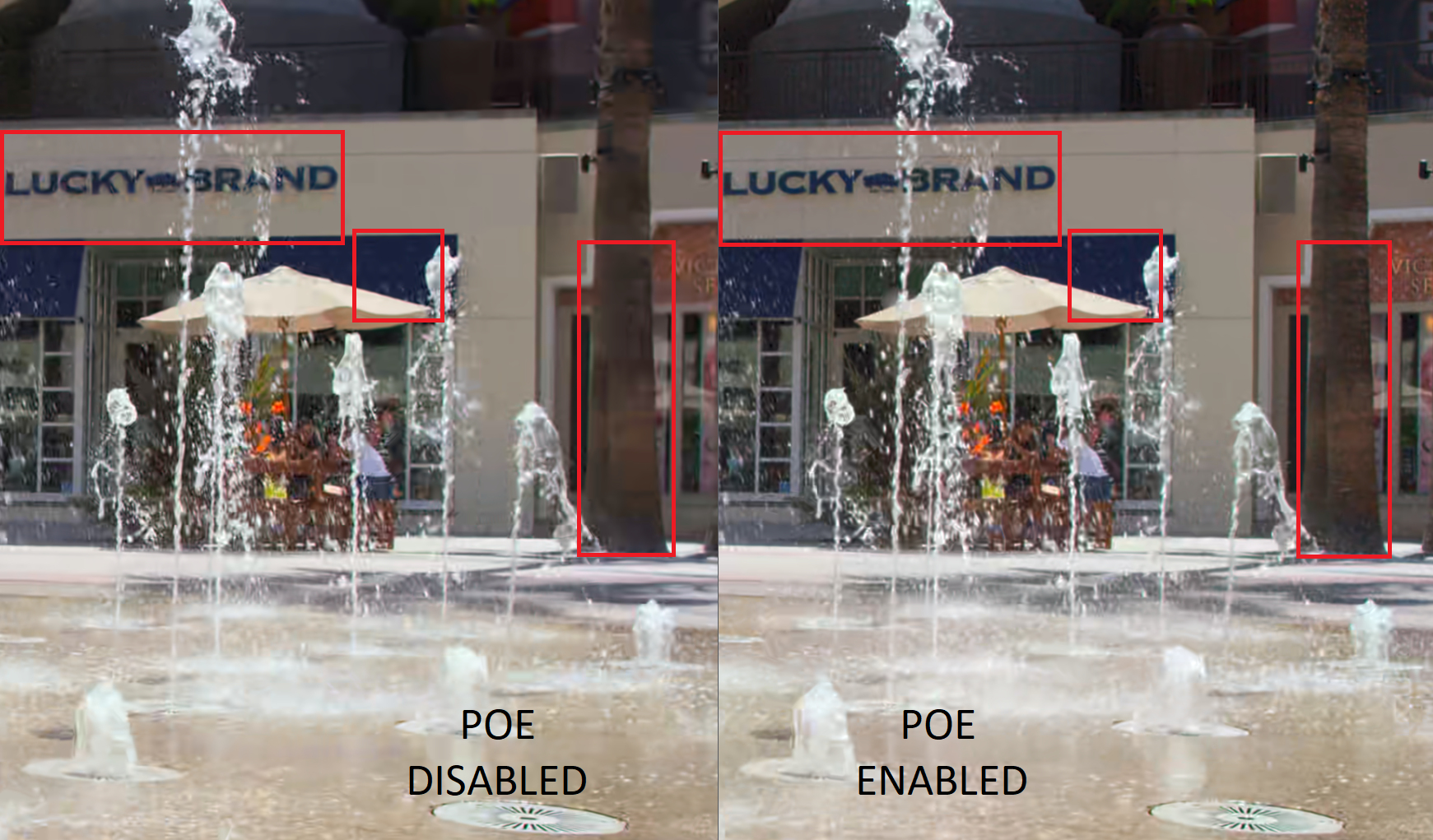

Although most of the metrics mentioned above report quality losses when using the POE modes of the encoders, informal subjective tests have demonstrated the opposite. The figure below shows a visual comparison between Spin Digital VVC’s POE and PSNR-tuned modes for the Netflix sequence called ToddlerFountain. As can be seen, the POE mode is able to preserve detail better.

4. Discussion

Based on the previous analysis and results, we present some recommendations for video encoder comparison and quality analysis:

When making encoder comparisons, it is recommended to disable the POE modes and use multiple VQA metrics. Enabling POE modes leads to mixed results (some encoders are penalized more than others).

To evaluate the impact of POE modes, subjective tests are needed. There are several studies that evaluate multiple codecs for different resolutions including 4K and 8K, in objective and subjective tests, using mostly the reference software implementations, but sometimes optimized implementations, but in all cases the encoders are configured without POE modes (e.g. [26] to [30]). This is fine for codec comparison (as mentioned in the previous point), but it leaves the main question about the impact of POE modes unanswered. We invite codec and VQA model researchers to perform subjective tests that assess the impact of POE. There are some indicative studies about the impact of POE modes such as the one performed for the validation of the VVC standard [33]. In this study, the comparison is not directly POE versus non-POE, but some indirect comparison of the VVC reference encoder (VTM), which has no POE, with the optimized VVenC encoder with POE enabled, where the baseline encoder is the HEVC reference encoder (HM). From the reported results, it can be deduced that the POE mode of VVenC achieves a MOS BD-rate savings of 11.40% over its non-POE mode.

In the industry, when performing codec comparisons and encoder settings recommendations, sometimes POE is enabled and sometimes not, creating inconclusive and confusing results.

We also encourage the industry to collaborate on open and/or standardized VQA metrics that can be used to understand the impact of POE on the final quality. Otherwise there is no way to quantify and compare results.

“The search for a universal objective measure of image quality will fascinate researchers for a number of years to come. Until this search has come to a successful end, we will have to include a subjective evaluation of image quality in the design of image communication systems”, by Professor Bernd Girod (1993).

5. Conclusion

Perceptually Optimized Encoding is used in most modern encoders for improving perceived quality and further reducing bitrate. Depending on the encoder implementation different techniques are used: perceptually optimized bit allocation and rate control, perceptual RDO, perceptually optimized transform and quantization, and perceptually optimized preprocessing filters.

This tech blog has shown that the use of the most popular objective quality metrics to compare the compression efficiency of POE-enabled encoders might lead to inconclusive results. One would expect that some of these metrics would be able to detect the subjective improvements provided by these POE modes compared to the non-POE modes (PSNR tuned), but in most cases they exhibited BD-rate losses. In addition, the comparison results can be biased if some of the POE modes are designed to maximize one of the metrics that are claimed to be correlated with human perception, e.g., XPSNR.

A reliable metric to compare encoders with POE enabled still remains the DMOS, but conducting a formal subjective test for each codec assessment round is time consuming and logistically challenging.

When performing encoder comparisons, for now, we recommend disabling POE modes. When using encoders in production POE modes can be enabled after careful analysis of their impact on different types of content. For the time being, we conclude that POE modes improve perceived quality and help increase compression efficiency, but we do not have a reliable method of assessing those improvements.

As influential researcher and Stanford professor Bernd Girod pointed out in 1993 (30 years ago): “The search for a universal objective measure of image quality will fascinate researchers for a number of years to come. Until this search has come to a successful end, we will have to include a subjective evaluation of image quality in the design of image communication systems” [1].

References

[1] B. Girod, “What’s Wrong with Mean-Squared Error?,” Digital Images and Human Vision, MIT Press, pp. 207-220, Cambridge, MA,1993: https://web.stanford.edu/~bgirod/pdfs/B93_1.pdf

[2] H. R. Wu and K.R. Rao, “Digital Video Image Quality and Perceptual Coding (1st Edition),” CRC Press, 2006. https://doi.org/10.1201/9781420027822

[3] Y. Zhang, Z.. Linwei, G. Jiang, S. Kwong, and C.C. Jay Kuo. “A Survey on Perceptually Optimized Video Coding.” ACM Comput. Surv. 00, 0, Article 11, pp. 1 – 37, August 2022: https://doi.org/10.48550/arXiv.2112.12284

[4] FFmpeg, “FFmpeg Codecs Documentation,” FFmpeg.org, 2023: https://www.ffmpeg.org/ffmpeg-codecs.html

[5] Multicoreware, “x265 Documentation,” x265 Website, 2014: https://x265.readthedocs.io/en/master/

[6] Intel and Netflix, “Scalable Video Technology for AV1 (SVT-AV1 Encoder and Decoder),” Open Visual Cloud Github, 2022: https://gitlab.com/AOMediaCodec/SVT-AV1

[7] J. Brandenburg, A. Wieckowski, T. Hinz, I. Zupancic, and Benjamin Bross, “VVenC Fraunhofer Versatile Video Encoder,” Fraunhofer HHI Website, 2023: https://www.hhi.fraunhofer.de/en/departments/vca/technologies-and-solutions/h266-vvc/fraunhofer-versatile-video-encoder-vvenc.html

[8] Intel, “Scalable Video Technology for HEVC Encoder (SVT-HEVC Encoder),” Open Visual Cloud Github, 2022: https://github.com/OpenVisualCloud/SVT-HEVC

[9] Xiph.org, “rav1e Encoder Documentation,” GitHub – Xiph, 2023: https://github.com/xiph/rav1e/blob/master/doc/RDO.md

[10] SVT-AV1, “VQ Tunning Mode Being Added to SVT-AV1,” Reddit Website, 2022: https://www.reddit.com/r/AV1/comments/sxw8be/vq_tuning_mode_being_added_to_svtav1/

[11] Alliance Open Media, “AV1 Codec Library,” AOM Google Git, 2023: https://aomedia.googlesource.com/aom/

[12] C. R. Helmrich, S. Bosse, M. Siekmann, H. Schwarz, D. Marpe and T. Wiegand, “Perceptually Optimized Bit-Allocation and Associated Distortion Measure for Block-Based Image or Video Coding,” 2019 Data Compression Conference (DCC), Snowbird, UT, USA, 2019, pp. 172-181: https://doi.org/10.1109/DCC.2019.00025

[13] D. Grois and A. Giladi, “HVS-based Perceptual Quantization Matrices for HDR HEVC Video Coding for Mobile Devices,” IBC Technical Papers, 2020: https://www.ibc.org/technical-papers/hvs-based-perceptual-quantization-matrices-for-hdr-hevc-video-coding-for-mobile-devices/6733.article

[14] Intel, “Scalable Video Technology for the Visual Cloud,” White Paper – Media and Communications – Visual Content and Experiences, 2019: https://www.intel.com/content/dam/develop/external/us/en/documents/scalable-video-technology-aws-wp.pdf

[15] R. Khsib, “Perceptually Motivated Compression Efficiency for Live Encoders,” ACM Mile-High Video (MHV) 2023: Amazon Technical Session, 2023.

[16] Spin Digital, “8K HEVC and VVC Real-time Encoder (Spin Enc Live),” Spin Digital Products, Feb. 2023: https://spin-digital.com/products/spin_enc_live/

[17] Spin Digital, “Whitepaper: A VVC/H.266 Real-time Software Encoder for UHD Live Video Applications,” Spin Digital News and Tech Blog, Feb 17. 2023: https://spin-digital.com/tech-blog/whitepaper-real-time-vvc-uhd-encoder-v2/

[18] ITU-R, “Methodologies for the Subjective Assessment of the Quality of Television Images,” Recommendation ITU-R BT.500-14, Oct. 2019: https://www.itu.int/rec/R-REC-BT.500

[19] Z. Wang, E. P. Simoncelli, and Alan C. Bovik, “Multiscale structural similarity for image quality assessment,” The Thirty-Seventh Asilomar Conference on Signals, Systems & Computers, 2003, Pacific Grove, CA, USA, 2003, pp. 1398-1402 Vol.2, doi: 10.1109/ACSSC.2003.1292216: https://ieeexplore.ieee.org/document/1292216

[20] Netflix, “Netflix/vmaf: Perceptual Video Quality Assessment Based on Multi-method Fusion,” Netflix GitHub, 2021: https://github.com/Netflix/vmaf

[21] Z. Li, “A VMAF Model for 4K,” VQEG Shared Files – VQEG Madrid, 2018: https://vqeg.org/VQEGSharedFiles/MeetingFiles/2018_03_Nokia_Spain/VQEG_SAM_2018_025_VMAF_4K.pdf

[22] C. Helmrich, “A Filter Plug-in Adding Support for Extended Perceptually Weighted Peak Signal-to-Noise Ratio (XPSNR) Measurements in FFmpeg,” GitHub – Fraunhofer HHI, 2021: https://github.com/fraunhoferhhi/xpsnr

[23] ITU-T, “Video Quality Assessment of Streaming Services over Reliable Transport for Resolutions up to 4K with Access to Full Bitstream Information,” Recommendation ITU-T P.1204.3, Jan. 2020: https://www.itu.int/rec/T-REC-P.1204.3

[24] A. Raake et al., “Multi-Model Standard for Bitstream-, Pixel-Based and Hybrid Video Quality Assessment of UHD/4K: ITU-T P.1204,” in IEEE Access, vol. 8, pp. 193020-193049, 2020, doi: 10.1109/ACCESS.2020.3032080: https://ieeexplore.ieee.org/document/9234526

[25] S. Göring, “Open source reference implementation of ITU-T P.1204.3,” GitHub – Telecommunication Telemedia Assessment, 2022: https://github.com/Telecommunication-Telemedia-Assessment/bitstream_mode3_p1204_3

[26] T. Nguyen and D. Marpe, “Compression efficiency analysis of AV1, VVC, and HEVC for random access applications,” APSIPA Transactions on Signal and Information Processing, 10, E11, July 13, 2021.

[27] D. Grois, A. Giladi, K. Choi, M. W. Park, Y. Piao, M. Park, and K. P. Choi, “Performance Comparison of Emerging EVC and VVC Video Coding Standards with HEVC and AV1,” in SMPTE Motion Imaging Journal, vol. 130, no. 4, pp. 1-12, May 2021.

[28] W. Ping-Hao, I. Katsavounidis, Z. Lei, D. Ronca, H. Tmar, O. Abdelkafi, C. Cheung, F. Ben Amara, and F. Kossentini, “Towards much better SVT-AV1 quality-cycles tradeoffs for VOD applications,” Proceedings SPIE 11842, Aug. 2021.

[29] C. Bonnineau, W. Hamidouche, J. Fournier, N. Sidaty, J.-F. Travers, and O. Deforges, “Perceptual Quality Assessment of HEVC and VVC Standards for 8K Video,” IEEE Transactions on Broadcasting, Sep. 2021.

[30] T. Laude, Y. Adhisantoso, J. Voges, M. Munderloh, and J. Ostermann, “A Comprehensive Video Codec Comparison”. APSIPA Transactions on Signal and Information Processing, 8, E30. doi:10.1017/ATSIP.2019.23.

[31] G. Bjontegaard, “Calculation of Average PSNR Differences between RD curves”, ITU-T SG16/Q6 VCEG 13th meeting, Austin, Texas, USA, April 2001, Doc. VCEG-M33: http://wftp3.itu.int/av-arch/video-site/0104_Aus/

[32] G. Bjontegaard, “Improvements of the BD-PSNR model”, ITU-T SG16/Q6 VCEG 35th meeting, Berlin, Germany, 16–18 July, 2008, Doc. VCEG-AI11: http://wftp3.itu.int/av-arch/video-site/0807_Ber/

[33] C. Helmrich, B. Bross, J. Pfaff, H. Schwarz, D. Marpe, and T. Wiegand, “Information on and Analysis of the VVC Encoders in the SDR UHD Verification Test,” Input document to JVET – JVET-T0103. Oct 2020: https://jvet-experts.org/doc_end_user/documents/20_Teleconference/wg11/JVET-T0103-v1.zip